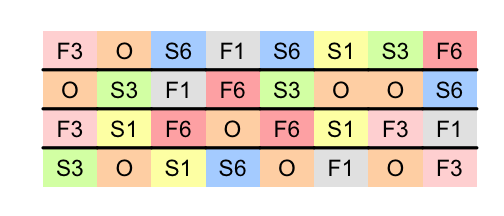

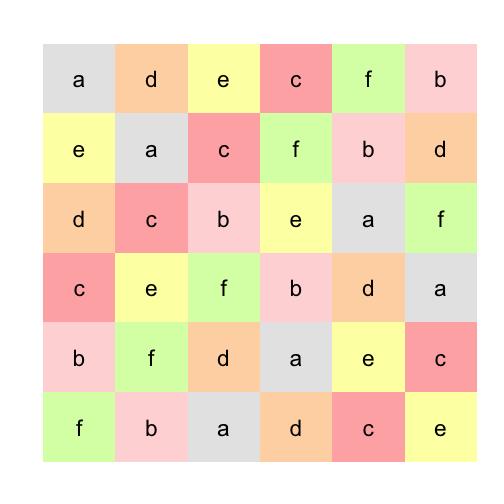

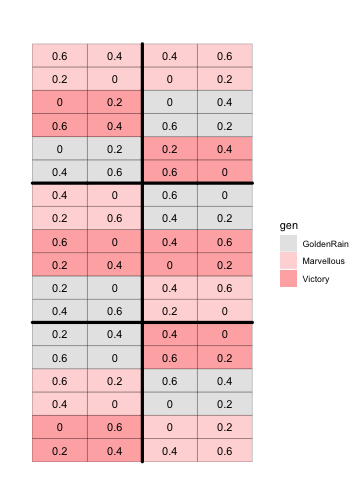

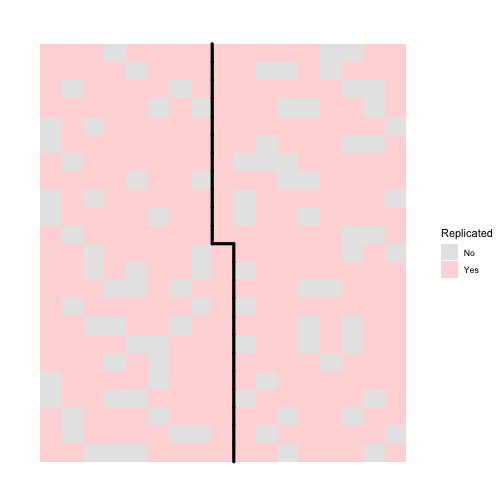

class: split-60 title-slide2 with-border white
background-image: url("images/bg5.jpg")
background-size: cover

.column.shade_main[.content[
<br><br>
# Statistical Methods for Omics Assisted Breeding

## <b style='color:#FFEB3B'>Experimental Design</b>

### <br>Emi Tanaka <br>emi.tanaka@sydney.edu.au <br>School of Mathematics and Statistics

### 2018/11/15

<br>

### <span style='font-size:14pt; color:pink!important'>These slides may take a while to render properly. You can find the PDF <a href="https://www.dropbox.com/s/j44mglhm5j8iek6/day4-session03-expdesign.pdf?dl=1">here</a>.</span>

<br><br>

### <a rel='license' href='http://creativecommons.org/licenses/by-sa/4.0/'><img alt='Creative Commons License' style='border-width:0; width:30pt' src='images/cc.svg' /><img alt='Creative Commons License' style='border-width:0; width:20pt' src='images/by.svg' /><img alt='Creative Commons License' style='border-width:0; width:20pt' src='images/sa.svg' /></a><span style='font-size:10pt'> This work by <span xmlns:cc='http://creativecommons.org/ns#' property='cc:attributionName'>Emi Tanaka</span> is licensed under a <a rel='license' href='http://creativecommons.org/licenses/by-sa/4.0/'>Creative Commons Attribution-ShareAlike 4.0 International License</a>.</span>
]]
.column[.content[

]]

<img src="images/USydLogo-white.svg" style="position:absolute; top:80%; left:40%;width:200px">

---
class: bg-main1 split-30 hide-slide-number

.column.bg-main3[
]
.column.slide-in-right[
.sliderbox.bg-main2.vmiddle[
# .font_large[Stages in a Designed Experiment]
]]

---
class: split-90 with-border

.column[.content[
# Stages for a designed experiment

1. **Planning** - What is the aim? What are the factors to consider? How will the responses be measured? And so on.
1. .indigo[**Statistical design**] <span><i class="fas fa-arrow-left faa-horizontal animated "></i></span> .indigo[What we are going to concentrate on later!]
1. **Data collection** - This is really important! Remember the GIGO principle: Garbage In, Garbage Out.
1. **Data scrutiny** - Important to do before performing statistical modelling.
1. **Analysis** - Mostly what we have concentrated on so far.
1. **Interpretation** - Important to get expert opinions.

{{content}}

.bottom_abs.width100.font_sm90[
Bailey (2008) Design of Comparative Experiments.
]]]
.column.bg-blue[.content[

]]

--

<span class=" faa-tada animated " style=" color:green; display: -moz-inline-stack; display: inline-block; transform: rotate(0deg);">It's important to think of the whole experiment holistically at each stage.</span>

---
class: split-60 with-border

.column[.content[
# Planning

## .pink[What is the aim of the experiment?]

* Choice of .indigo[treatments] is a key step. Make sure you examine the set of treatments in the experiment in relation to the aim of the experiment.
* Discuss all aspects of the experiment, e.g. constraints, data collection, what may go wrong, how many observations could be lost, which factors need to be controlled for, etc.
]]
.column.bg-blue[.content[
## Examples

* In the treatment of a disease for which an effective therapy already exists, it is unethical to 'do nothing' in an experiment.<br> In this case the treatments should be the new therapy and the existing therapy.
* In a field test, the seeds of new breeding lines may be limited, so you may not be able to have as many replicates, or test at as many locations, as you would like.
]]

---
class: split-1-2-1 with-border

.column.bg-blue[.content[

]]
.column[.content[
# Statistical Design

<br><br><br>

The key to a statistically efficient design is optimisation in terms of providing a .indigo[valid framework], with .indigo[sufficient power] using the resources available and .indigo[accommodating the practical constraints].

<br><br><br>

More on this later.
]]
.column.bg-blue[.content[

]]

---
class: split-60 with-border

.column[.content[
# Data Collection

* A clear outline of the data to be collected should be discussed.
* *A potential mistake*: recording data using a poor metric, e.g. rating scales, which are typically coarse and not linear. <br> **Example**: if students' work is marked as Great, Good, Pass or Fail, how would you distinguish the top student among all those marked as Great?
* Data collection should *not* simply be left to casual or junior workers.
* Record any events related to the experiment in as much detail as you can.
* .indigo[Data should be in a form such that each row corresponds to one observational unit.]
]]
.column.bg-blue[.content[
* Do not copy and paste data across spreadsheets. It introduces room for human error.
* Any modification, such as a transformation, is best done in code, which leaves a traceable record of your actions.
* Once data is recorded, it should be protected from changes, and if a new version or update comes, keep a record of the old data.

.bottom_abs.bg_sm70[
**Reference for next two slides**: Broman & Woo (2018) Data Organization in Spreadsheets. *The American Statistician* **72**(1) 2-10
]
]]

---
class: split-10

.row[.content[
# Make it into a rectangle: Example 1
]]
.row[
.split-20[
.row[.content.center[
<div>
<span style="vertical-align: middle;">Not good:</span>
<img src="images/spreadsheet-bad1.png" style="vertical-align: middle;"/>
</div>
]]
.row[.content.center[
<br>
<div>
<span style="vertical-align: middle;">Good:</span>
<img src="images/spreadsheet-good1.png" style="vertical-align: middle;"/>
</div>
]]]]

---
class: split-10

.row[.content[
# Make it into a rectangle: Example 2
]]
.row[
.split-40[
.row[.content.center[
<div>
<span style="vertical-align: middle;">Not good:</span>
<img src="images/spreadsheet-bad2.png" style="vertical-align: middle;"/>
</div>
]]
.row[.content.center[
<br>
<div>
<span style="vertical-align: middle;">Good:</span>
<img src="images/spreadsheet-good2.png" style="vertical-align: middle;"/>
</div>
]]]]

---
class: split-90 with-border

.column[.content[
# Data Scrutiny

* Again, avoid using data that has been manipulated (e.g. reordered or transformed) unless there is a good reason for the manipulation (e.g. SNP data are pre-processed before modelling, but that is usually necessary because of the sheer size and noise of the original data).
* The .pink[raw form of the data] should be used for analysis. Any manipulation that is needed should be coded so there is a record of the steps taken.
* Be aware of rounding and significant figures. Preserve the original measurements.
* Check that the variables are within plausible ranges or values. E.g. do you have a negative yield? Is a subset of measurements on a different scale?
* Check for outliers.
* Treatments should be randomly allocated to experimental units (defined later). It is a good idea to quickly check that the treatments appear to have been randomly allocated.
]]
.column.bg-blue[.content[

]]

---
class: split-50 with-border

.column[.content.font_sm90[
# Analysis

* You need to have an idea of how you will analyse the data before you have them.
* .pink[Example: Potato scab infection with sulfur treatments]. The experiment investigates the effect of sulfur on controlling scab disease in potatoes. There are seven treatments: a control, plus spring and fall applications of 300, 600 and 1200 lbs/acre of sulfur. The infection, as a percentage of the surface area covered with scab, will be measured. There are 8 replicates of the control and 4 replicates of each of the other treatments. Treatments are randomised within rows. Description based on `cochran.crd` from `agridat`.
]]
.column[.content[
### Planned Analysis

```r
# dat: the data frame to be collected, one row per plot, with row as a factor
library(asreml); library(lme4)
fit <- asreml(inf ~ trt, random = ~row, data = dat)  # asreml
fit <- lmer(inf ~ trt + (1|row), data = dat)         # lme4
fit <- aov(inf ~ trt + Error(row), data = dat)       # base R aov
```

Your analysis may change if you find other trends or sources of variation in the data, but .indigo[there should be a plan of the analysis before conducting the experiment].
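One way to check such a plan before any data exist is a "dummy" analysis: fit the planned model to a simulated response on the intended layout and confirm that the strata and degrees of freedom are as expected. This is a minimal base-R sketch, assuming a hypothetical layout of 4 rows of 8 plots where each row holds the control (`O`) twice and each sulfur treatment once; the labels `O`, `F3`, ..., `S12` and the data frame `plan` are illustrative, not taken from `agridat`.

```r
# Hypothetical layout: 4 rows x 8 plots = 32 plots; each row holds the
# control "O" twice and each of the six sulfur treatments once
trts <- c("O", "O", "F3", "F6", "F12", "S3", "S6", "S12")
set.seed(2018)  # arbitrary seed so the randomisation is reproducible
plan <- data.frame(
  row = factor(rep(1:4, each = 8)),
  # randomise the treatments independently within each row
  trt = factor(unlist(lapply(1:4, function(r) sample(trts))))
)
# Dummy response: the skeleton analysis depends only on the layout
plan$inf <- rnorm(nrow(plan))
fit <- aov(inf ~ trt + Error(row), data = plan)
summary(fit)  # row stratum: 3 df; within rows: trt 6 df, residual 22 df
table(plan$trt, plan$row)  # control twice per row, the others once
```

If the degrees of freedom differ from what you expect, the layout or the planned model needs revisiting before the experiment starts.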
]]

---
class: bg-main1 split-30 hide-slide-number

.column.bg-main3[
.bottom_abs.width100.gray[
Bailey (2008) Design of Comparative Experiments.
]]
.column.slide-in-right[
.sliderbox.bg-main2.vmiddle[
# .font_large[Experimental Design]
]]

---
class: split-50 with-border

.column[.content[
# Definitions

* A .indigo[treatment] is the entire description of what can be applied to an experimental unit; the set of treatments comprises the different experimental conditions to be tested.
* An .indigo[experimental unit] (EU) is the smallest division of the experimental material such that any two units may receive different treatments in the actual experiment.
* An .indigo[observational unit] (OU) is the smallest unit on which a response is measured.
* The .indigo[design] is the allocation of treatments to EUs (and hence to OUs).
* A design is .indigo[complete] if each treatment appears within each block, and .indigo[balanced] if each treatment appears the same number of times.
]]
.column.bg-blue[.content[
# Example: Tomatoes

Different varieties of tomato are grown in pots, with different composts and different amounts of water. Each plant is supported on a vertical stick until it is 1.5 metres high, then all further new growth is wound around a horizontal rail (within the same pot). Groups of five adjacent plants are wound around the same rail. When the tomatoes are ripe they are harvested and the weight of saleable tomatoes per rail is recorded.

* **Treatment**: Variety-compost-water combination
* **Experimental Unit**: Pots
* **Observational Unit**: Rails
]]

---
class: split-50 with-border

.column[.content[
# Blocking

Blocking divides the set of EUs into groups (blocks) of alike units.

* If done well, blocking can lower the variance of treatment contrasts, which increases power.
* Blocking is not always beneficial: an unnecessary blocking factor uses up residual degrees of freedom, which can decrease power when the sample size is small.
* Blocks whose units are not homogeneous can decrease the power of the experiment.
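The trade-off in these bullets can be seen in a small simulation. This is a sketch under assumed, hypothetical values: 4 treatments in 5 blocks with strong block-to-block differences (block SD 2, plot SD 0.5). Fitting blocks costs 4 residual degrees of freedom but, because the blocks here differ a lot, it shrinks the standard error of a treatment contrast.

```r
set.seed(1)  # arbitrary seed for reproducibility
d <- expand.grid(trt = factor(LETTERS[1:4]), block = factor(1:5))
block_eff <- rnorm(5, sd = 2)  # large differences between blocks
# treatment effects 1..4, plus block effect, plus plot-level noise
d$y <- as.numeric(d$trt) + block_eff[d$block] + rnorm(nrow(d), sd = 0.5)

fit_crd  <- lm(y ~ trt, data = d)          # ignores the blocks
fit_rcbd <- lm(y ~ trt + block, data = d)  # accounts for the blocks

# Residual degrees of freedom: 16 without blocks, 12 with blocks
c(crd = df.residual(fit_crd), rcbd = df.residual(fit_rcbd))

# Standard error of the B - A treatment contrast under each model
se_BA <- function(fit) summary(fit)$coefficients["trtB", "Std. Error"]
c(crd = se_BA(fit_crd), rcbd = se_BA(fit_rcbd))
```

With weak block effects the picture can reverse: the lost degrees of freedom then cost more than the variance reduction gains.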
]]
.column.bg-blue[.content[
# Types of Blocking

* .yellow[Natural discrete divisions] between EUs. E.g. in an experiment with people, sex provides an obvious block.
* .yellow[Continuous gradients]: if the experiment is spread out in time or space, then there will probably be continuous underlying trends but no natural boundaries.
  * E.g. units can be grouped into blocks that are contiguous in time or space.
  * E.g. common in agricultural field trials, where the underlying trend may be changes in fertility. Choices of block boundaries may be somewhat arbitrary.
]]

---
class: split-40 with-border

.column[.content.font_sm90[
# How to block?

* If possible, blocks should all have the same size.
* If possible, blocks should be big enough to allow each treatment to occur at least once in each block.
* Natural discrete blocks should always be used once they have been recognised.
]]
.column.bg-blue[.content.font_sm90[
# Replication

Increasing replication generally increases power, but it also generally increases the cost of the experiment.

* .yellow[Pseudo-replication] or .yellow[false replication] describes a situation in which multiple measurements are taken from each experimental unit and treated as if they were independent replicates. In the life sciences, it is often important to distinguish between technical and biological replication.
* .indigo[Technical replication] occurs when several measurements are taken from the same biological material. Technical replication is always pseudo-replication.
* .indigo[Biological replication] occurs when measurements are taken from several independent biological subjects.
]]

---
class: split-40 with-border

.column[.content.font_sm90[
# Pseudo-replication

Two controlled-environment cabinets, one at 10°C and one at 20°C, each contain eight seed trays, with two watering regimes (A and B) each applied to four trays chosen at random within each cabinet. The average growth of the seedlings in each tray is measured.
<center>
<img src="images/splitplot.png" width="70%"/>
</center>
]]
.column.bg-blue.font_sm90[.content[
## Is the replication of the temperature treatment 1 or 8?

{{content}}
]]

--

```r
summary(aov(Length ~ Temp*Water + Error(Cabinet/Tray), data=dat))
```

```
Error: Cabinet
     Df Sum Sq Mean Sq
Temp  1    115     115

Error: Cabinet:Tray
           Df Sum Sq Mean Sq F value   Pr(>F)
Water       1 152.07  152.07  39.128 4.22e-05 ***
Temp:Water  1   2.54    2.54   0.654    0.435
Residuals  12  46.64    3.89
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

The temperature treatment is <span class="yellow">unreplicated</span> and there are no residual degrees of freedom for the cabinet stratum.

---
class: split-40 with-border

.column[.content.font_sm90[
# Pseudo-replication

Two controlled-environment cabinets, one at 10°C and one at 20°C, each contain eight seed trays, with two watering regimes (A and B) each applied to four trays chosen at random within each cabinet. The average growth of the seedlings in each tray is measured. The experiment was repeated with another two cabinets.

<img src="images/splitplot2.png" width="100%"/>
]]
.column.bg-blue.font_sm90[.content[

```r
summary(aov(Length ~ Temp*Water + Error(Experiment/Cabinet/Tray), data=dat))
```

```
Error: Experiment
          Df Sum Sq Mean Sq F value Pr(>F)
Residuals  1  49.93   49.93

Error: Experiment:Cabinet
          Df Sum Sq Mean Sq F value Pr(>F)
Temp       1 203.91  203.91   259.5 0.0395 *
Residuals  1   0.79    0.79
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Error: Experiment:Cabinet:Tray
           Df Sum Sq Mean Sq F value   Pr(>F)
Water       1 306.34  306.34  86.055 9.89e-10 ***
Temp:Water  1   4.69    4.69   1.317    0.262
Residuals  26  92.56    3.56
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```
]]

---
class: split-60 with-border

.column[.content.font_sm90[
# Randomisation

Treatments are randomly allocated to the experimental units.

# How to randomise?

You can use R, e.g. for 3 treatments, each replicated once in each of 2 blocks:

```r
des <- expand.grid(Unit=1:3, Block=1:2)
set.seed(1) # arbitrary seed so that the randomisation is reproducible
# randomise the 3 treatments independently within each block
des$Trt <- as.vector(replicate(2, sample(c("A", "B", "C"))))
des
```

```
##   Unit Block Trt
## 1    1     1   A
## 2    2     1   C
## 3    3     1   B
## 4    1     2   C
## 5    2     2   A
## 6    3     2   B
```
]]
.column.bg-blue[.content[
## Why randomise?

Bias, bias, bias...

* .yellow[Systematic bias], for example, doing all the tests on treatment A in January and then all the tests on treatment B in March;
* .yellow[Selection bias], for example, choosing the healthiest patients for the treatment that you are trying to prove is best; and
* .yellow[Accidental bias], for example, using the first rats that the animal handler takes out of the cage for one treatment and the last rats for the other.
]]

---
class: bg-main1 split-30 hide-slide-number

.column.bg-main3[
.bottom_abs.width100.gray[
Caution: an experimental design should be tailored to the experiment, rather than picked from a list of named designs without much consideration of the experiment.
]]
.column.slide-in-right[
.sliderbox.bg-main2.vmiddle[
# .font_large[Some Named Designs]
]
]

---
class: split-60 with-border

.column[.content[
# Randomised Complete Block Design

* Every block contains every treatment.
* The RCBD has the maximum .indigo[efficiency factor] of 1.

### Example: RCBD with 8 treatments, each replicated 4 times, in 4 blocks

<img src="day4-session03-expdesign_files/figure-html/unnamed-chunk-9-1.png" style="display: block; margin: auto;" />
]]
.column.bg-blue[.content[
* The .yellow[efficiency factor] of a design `\(\mathcal{D}\)` is the ratio of the variance of a treatment contrast estimator under a randomised complete block design with the same number of treatments and replicates to the variance of that estimator under `\(\mathcal{D}\)`; it lies between 0 and 1 (inclusive).
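As a worked illustration, here is a small sketch assuming fixed block effects and a hypothetical balanced incomplete block design with 3 treatments in 3 blocks of size 2. With fixed blocks, the treatment information matrix reduces to `C = diag(r) - N diag(1/k) N'` for the treatment-by-block incidence matrix `N`. The variance of the estimated contrast between treatments 1 and 2 (in units of the residual variance) is then compared with `2/r` for an RCBD with the same replication `r`.

```r
library(MASS)  # ginv(): Moore-Penrose generalised inverse

# Hypothetical incidence matrix N (treatments x blocks): 3 treatments in
# 3 blocks of size 2, i.e. blocks (1,2), (1,3) and (2,3)
N <- matrix(c(1, 1, 0,
              1, 0, 1,
              0, 1, 1), nrow = 3)
r <- rowSums(N)  # replication of each treatment (2)
k <- colSums(N)  # block sizes (2)

# Treatment information matrix with fixed blocks
C <- diag(r) - N %*% diag(1 / k) %*% t(N)

# Variance (in units of sigma^2) of the elementary contrast tau1 - tau2
d12 <- c(1, -1, 0)
v_design <- drop(t(d12) %*% ginv(C) %*% d12)  # 4/3 for this design
v_rcbd <- 2 / r[1]                            # RCBD with the same replication

v_rcbd / v_design  # efficiency factor, 0.75 here
```

The result agrees with the textbook value for a balanced incomplete block design, lambda t / (r k) = 1 x 3 / (2 x 2) = 0.75.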
* Planned analysis:

```r
asreml(y ~ trt, random = ~block, data = dat)
```

* For the model `\(\boldsymbol{y} = \textbf{X}\boldsymbol{\tau} + \textbf{Z}\boldsymbol{u} + \boldsymbol{e}\)` with `\(\text{var}(\boldsymbol{y}) = \textbf{V}\)`,

`$$\text{var}(\hat{\boldsymbol{\tau}})=(\textbf{X}^\top\textbf{V}^{-1}\textbf{X})^{-}.$$`
]]

---
class: split-90 with-border

.column[.content[
# Incomplete Block Design

* An incomplete block design may arise by design, or from a complete design that ends up with some missing responses.

<img src="day4-session03-expdesign_files/figure-html/unnamed-chunk-11-1.png" style="display: block; margin: auto;" />

Most designs used in practice (at least in Australia) are incomplete.
]]
.column.bg-blue[.content[

]]

---
class: split-60 with-border

.column[.content[
# Latin Square Design

A Latin square design is a square array with two blocking factors (rows and columns) that are perpendicular to each other.
]]
.column.bg-blue[.content[
* **Data**: Six wheat plots were sampled by six operators and shoot heights were measured. The operators sampled the plots in six ordered sequences. The response variate was the difference between the measured height and the true height of the plot.
* Latin square designs are an example of a .yellow[row-column design].
* Latin square designs are like sudoku: each treatment occurs exactly once in each row and each column.

.bottom_abs.font_small[
Cochran and Cox (1957) Experimental Designs, 2nd ed., Wiley and Sons, New York.
]]]

---
class: split-40 with-border

.column[.content[
# Split Plot Design
]]
.column.bg-blue[.content[
* Each block contains 3 main plots, and each main plot is split into 4 subplots.
* There are two treatment factors: nitrogen level and genotype.
* The experimental unit for genotype is the main plot within a block (i.e. genotypes are randomised to main plots within each block).
* The experimental unit for nitrogen level is the subplot within a main plot.
* A split plot design is a special case of a .yellow[nested design].
* Caution is needed with nested designs, as they can give rise to .yellow[pseudo-replication].

.bottom_abs.width100.font_sm80[
Ryder (1981) Field plans: why the biometrician finds them useful. *Experimental Agriculture* **17** 243-256
]]]

---
class: split-60 with-border

.column[.content[
# Grid Plot Design

<img src="day4-session03-expdesign_files/figure-html/unnamed-chunk-14-1.png" style="display: block; margin: auto;" />
]]
.column.bg-blue[.content[
* Check varieties are replicated in a regular pattern to provide local control of spatial variation.
* The test lines are unreplicated.
* This design suits early generation variety trials, where the seed available for test lines may be limited.

.bottom_abs.font_sm70[
**Data**: Cullis et al. (1989) A New Procedure for the Analysis of Early Generation Variety Trials. *Journal of the Royal Statistical Society. Series C (Applied Statistics)* **38** 361-375<br>
**Design**: Kempton (1982) The design and analysis of unreplicated field trials. *Vorträge für Pflanzenzüchtung* **7** 219-242
]]]

---
class: split-60 with-border

.column[.content[
# Partially Replicated Design
]]
.column.bg-blue[.content[
* Another design for early generation variety trials is the partially replicated (p-rep) design.
* A proportion of the test lines are replicated, taking the place of the plots that would otherwise be used for check varieties.
* In theory, it should increase the response to selection.

.bottom_abs.font_sm80.width100[
Cullis et al. (2006) On the design of early generation variety trials with correlated data. *Journal of Agricultural, Biological, and Environmental Statistics* **11**(4) 381-393
]]]

---
class: split-80 with-border

.column[.content[
# Multiphase Designs

<img src="images/multiphase.png" width="100%"/>
]]
.column.bg-blue[.content.vmiddle[
## Would you randomise and have replication in later phases?

{{content}}
]]

--

<br><br>

# You should!
---
class: split-80 with-border

.column[.content[
# Multiphase Designs

<img src="images/multiphase1.png" width="100%"/>
]]
.column.bg-blue[.content[

]]

---
class: split-80 with-border

.column[.content[
# Multiphase Designs

<img src="images/multiphase2.png" width="100%"/>
]]
.column.bg-blue[.content[

]]

---
class: split-80 with-border

.column[.content[
# Multiphase Designs

<img src="images/multiphase3.png" width="100%"/>
]]
.column.bg-blue[.content[

]]

---
class: split-80 with-border

.column[.content[
# Multiphase Designs

<img src="images/multiphase4.png" width="100%"/>
]]
.column.bg-blue[.content[

]]

---
class: split-80 with-border

.column[.content[
# Multiphase Designs

<img src="images/multiphase5.png" width="100%"/>
]]
.column.bg-blue[.content[
.font_small[
* Tanaka et al. (2017) Increased accuracy of starch granule type quantification using mixture distributions. *Plant Methods* **13** 107
* Brien (2017) Multiphase experiments in practice: A look back. *Australian & New Zealand Journal of Statistics* **59**(4) 327-352
* Smith et al. (2015) Multi-phase variety trials using both composite and individual replicate samples: a model-based design approach. *The Journal of Agricultural Science* **153**(6) 1017-1029
]]]

---
class: bg-main1 split-30 hide-slide-number

.column.bg-main3[
.bottom_abs.font_sm80.gray[
Butler (2013) On The Optimal Design of Experiments Under the Linear Mixed Model. *PhD Thesis*
]]
.column.slide-in-right[
.sliderbox.bg-main2.vmiddle[
# .font_large[Optimal Design]
]]

---
class: split-50 with-border

.column[.content[
# Optimal Design

* Optimal designs are a class of designs that are *model-based*.
* We define the model to be used for the analysis and aim to optimise a chosen objective function.
* Reasonable initial values need to be supplied for any parameters (e.g. variance components) involved in the calculation of the objective function.
* The most widely used and accepted optimality criterion for choosing between designs for genotype selection experiments is the .indigo[A-optimality criterion].
]]
.column.bg-blue[.content[
# A-optimality criterion

* A design is .yellow[A-optimal] if its A-value is the minimum among all designs under consideration.
* The .yellow[A-value] of a design is the average variance of the elementary treatment contrasts (or, up to a constant, the trace of the generalised inverse of the information matrix).

<br>

<img src="images/avalue.png" width="100%"/>
]]

---
class: split-60 with-border

.column[.content[
# Getting the template for design

* First, set up the template.
* A rectangular field of 4 rows by 8 columns, with 8 treatments and 4 blocks.
* We want to randomise the treatments within blocks and ensure that the same treatment does not appear more than once in any row or column.

```r
library(dplyr)  # for %>%, mutate(), case_when() and mutate_all()
template <- expand.grid(Row=1:4, Column=1:8) %>%
  mutate(
    Block=case_when(
      Column %in% 1:2 ~ 1,
      Column %in% 3:4 ~ 2,
      Column %in% 5:6 ~ 3,
      Column %in% 7:8 ~ 4),
    Trt=rep(LETTERS[1:8], times=4)) %>%  # each block starts with A-H
  mutate_all(as.factor)
```
]]
.column[.content[
# Design Template

<iframe src="html_extras/table16.html" width="100%" height="500" scrolling="yes" seamless="seamless" frameBorder="0"> </iframe>
]]

---
layout: true
class: split-three with-border

.column[.content[
# Initial Design

<center>
<img src="images/RCBD_first.png" width="100%"/>
</center>
]]
.column[.content[
# Random Permutation

<center>
<img src="images/RCBD.gif" width="100%"/>
</center>
]]
.column[.content[
# Final Design

<center>
<img src="images/RCBD_final.png" width="100%"/>
</center>
]]

---
class: show-100

---
class: show-110
count: false

---
count: false

---
class: split-80 with-border
layout: false

.column[.content[
# Optimal Design using `od`

```r
library(od)
sv <- od(fixed=~Trt, random=~ Block + Row + Column, permute=~Trt, swap=~Block,
         data=template, start.values=TRUE)
sapply(sv$G.param, function(x) x$variance$initial)
```

```
  Block.Block       Row.Row Column.Column
          0.1           0.1           0.1
```

These values can be modified as you see fit.

```r
des <- od(fixed=~Trt, random=~Block + Row + Column, permute=~Trt,
          G.param=sv$G.param, swap=~Block, data=template,
          search="random", maxit=10000)
```

```
Initial A-value = 0.700000 (8 A-equations; rank C 7)
Final A-value after 10000 iterations: 0.521978
Done optimise; elapsed = 0.32
```
]]
.column[.content[
The randomised design is stored in

```r
des$design
```

```
   Row Column Block Trt
1    1      1     1   F
2    2      1     1   E
3    3      1     1   H
4    4      1     1   C
5    1      2     1   D
6    2      2     1   G
7    3      2     1   A
8    4      2     1   B
9    1      3     2   C
10   2      3     2   F
11   3      3     2   G
12   4      3     2   D
13   1      4     2   E
14   2      4     2   H
15   3      4     2   B
16   4      4     2   A
17   1      5     3   G
18   2      5     3   A
19   3      5     3   F
20   4      5     3   H
21   1      6     3   B
22   2      6     3   C
23   3      6     3   D
24   4      6     3   E
25   1      7     4   H
26   2      7     4   B
27   3      7     4   C
28   4      7     4   G
29   1      8     4   A
30   2      8     4   D
31   3      8     4   E
32   4      8     4   F
```
]]

---
class: split-50 with-border

.column[.content[
# Searching algorithm

## `random`

* A random search randomly permutes the treatments applied to the experimental units.
* It is not necessarily an exhaustive search, so there is some chance that you will miss a design with a lower A-value.
* This chance decreases as you search more (i.e. with more iterations).
]]
.column.bg-blue[.content[
#

## `tabu`

* Another search algorithm implemented in `od` is `tabu`.
* The TABU search strategy operates with a memory-resident taboo search list. A unique key is generated for each design permutation vector visited and is stored in a binary tree.
* More simply, the search algorithm has a "memory" of the designs it has visited.
* Simply replace `search="random"` with `search="tabu"`.
]]

---
class: split-70 with-border

.column[.content[
# Complex designs

* As optimal designs are model-based, you can generate complex designs.
* E.g. you can fit the genotype treatment as random and use the genomic or numerator relationship matrix.
```r
des <- od(fixed=~1, random=~vm(Geno, Ainv) + ide(Geno) + Block + Row + Column,
          data=dat, permute=~Geno, optimize="ginv", search="tabu", maxit=30,
          G.param=sv$G.param)
```

* `optimize="ginv"` means that it optimises for the additive genetic effects.
* `optimize="data"` means that it optimises for the total genetic effects.
* You can also fit a separable autoregressive process of order 1, etc.
]]
.column.bg-blue[.content[
* Note that `od` version 2 uses `asreml`-R version 4 notation, while `od` version 1 is more closely aligned with `asreml`-R version 3.
* You do need sensible variance estimates as initial values.
* `od` is a *new* package and should be considered a beta release. Use the generated designs with caution for now.
* `od` version 2 does not have good documentation yet.
]]

---
class: split-two

.column.bg-yellow[.content.font_sm80[
# Key References

* Butler et al. (2014) On the Design of Field Experiments with Correlated Treatment Effects. *Journal of Agricultural, Biological, and Environmental Statistics* **19**(4) 547-557
* Butler (2013) On The Optimal Design of Experiments Under the Linear Mixed Model. *PhD Thesis*
* Coombes (2002) The Reactive TABU Search for Efficient Correlated Experimental Designs. *PhD Thesis*
* Bailey (2008) Design of Comparative Experiments.
]]
.column.bg-main1.white[.content[
# Notes

* Experimental design is important.
* Be wary of using an experimental design from a named list. The design should be tailored to the experiment.
* Don't reuse the same randomised design!
* Don't modify the design in the middle of the experiment.
* Record everything meticulously.
* Don't forget the GIGO principle: "Garbage in, garbage out".
]]

---
class: split-40 title-slide2 with-border white
background-image: url("images/bg5.jpg")
background-size: cover

.column[.content.vmiddle[
<center>
{{content}}
</center>
]]
.column.shade_main[.content[
<br><br>
# <u>Slides</u>

These slides were made using the R package [`xaringan`](https://github.com/yihui/xaringan) with the [`ninja-themes`](https://github.com/emitanaka/ninja-theme) and are available at [`bit.ly/UT-WS-expdesign`](http://bit.ly/UT-WS-expdesign).

# <u>Your Turn</u>

<s>Download `day4-session03-expdesign-tutorial.Rmd` here, open in RStudio, push the button "Run Document" on the top tab and work through the exercises.</s> For workshop participants, contact Emi for the tutorials.

<br><br>

### <a rel='license' href='http://creativecommons.org/licenses/by-sa/4.0/'><img alt='Creative Commons License' style='border-width:0; width:30pt' src='images/cc.svg' /><img alt='Creative Commons License' style='border-width:0; width:20pt' src='images/by.svg' /><img alt='Creative Commons License' style='border-width:0; width:20pt' src='images/sa.svg' /></a><span style='font-size:10pt'> This work by <span xmlns:cc='http://creativecommons.org/ns#' property='cc:attributionName'>Emi Tanaka</span> is licensed under a <a rel='license' href='http://creativecommons.org/licenses/by-sa/4.0/'>Creative Commons Attribution-ShareAlike 4.0 International License</a>.</span>
]]

<img src="images/USydLogo-white.svg" style="position:absolute; top:80%; left:80%;width:200px">

--

<div class="font_large">Thank you!</div>