Architecture of Large Language Models

Introduction to Large Language Models for Statisticians

15th October 2024

Overview of training an LLM

Corpus (raw)

Corpus (labelled)

Corpora for training data

Corpus (raw)

- LLMs are trained on large diverse datasets such as books, Wikipedia, websites, code, scientific articles and social network platforms.

- For example, LLaMA1 used:

  - (67%) CommonCrawl: web archives from 2017 to 2020

  - (15%) C4: pre-processed CommonCrawl

  - (4.5%) GitHub: repos distributed under Apache, BSD, and MIT licenses

  - (4.5%) Wikipedia: online encyclopaedia

  - (4.5%) Gutenberg and Books3: books from Project Gutenberg and the Pile dataset

  - (2.5%) ArXiv: scientific preprints

  - (2%) StackExchange: a network of high quality Q&A websites

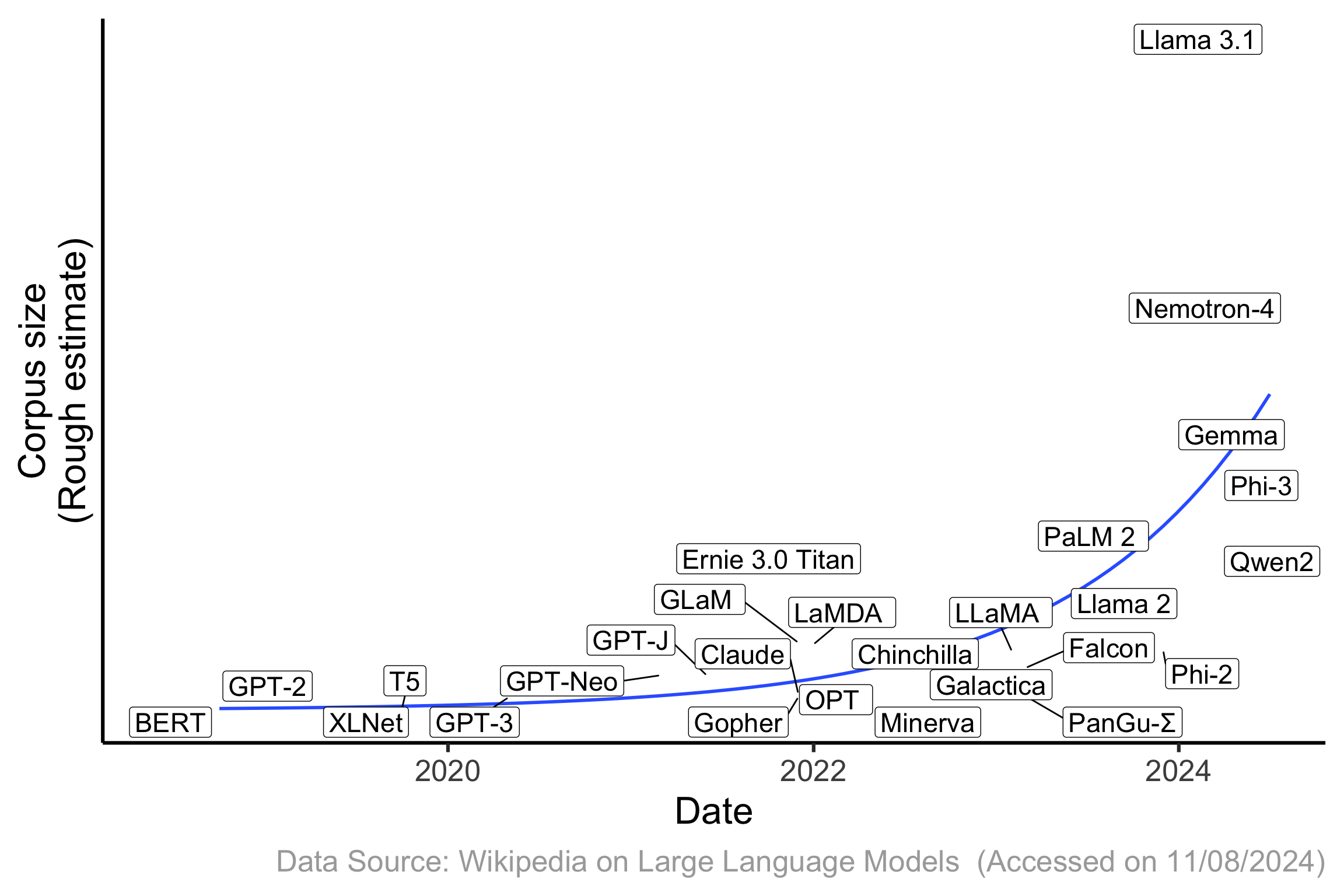

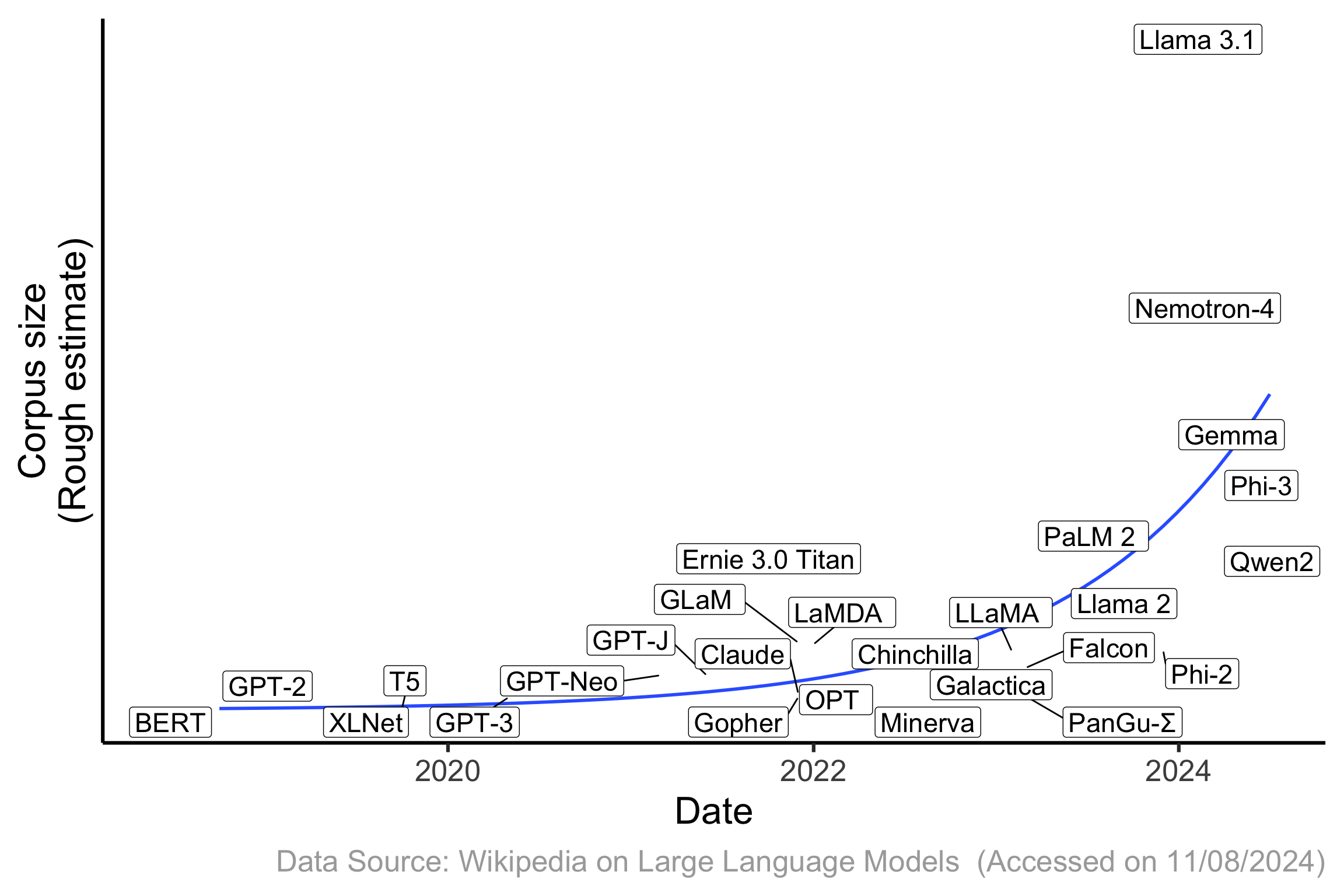

Corpus size used for training increasing over time

- Note: not all vendors publicly document their corpus size for training.

Tokenization

Input: Where there’s a will, there’s a

Tokens: Where | there | ’s | a | will | , | there | ’s | a

Token IDs: 11977 | 1354 | 802 | 261 | 738 | 11 | 1354 | 802 | 261

LLM output: token ID 2006, which decodes to "way"
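The mapping from text to token IDs can be sketched with a toy tokenizer. This is only an illustration: the regular expression and the `VOCAB` dictionary are assumptions made for this example (the IDs are copied from the slide, not read from a real tokenizer), and production tokenizers use learned subword vocabularies instead.

```python
import re

# Hypothetical vocabulary: IDs copied from the slide, not from a real tokenizer.
VOCAB = {"Where": 11977, "there": 1354, "'s": 802, "a": 261,
         "will": 738, ",": 11, "way": 2006}
ID_TO_TOKEN = {token_id: token for token, token_id in VOCAB.items()}


def tokenize(text):
    """Split text into the clitic 's, words, and single punctuation marks."""
    return re.findall(r"'s|\w+|[^\w\s]", text)


def encode(text):
    """Map each token to its ID in the toy vocabulary."""
    return [VOCAB[token] for token in tokenize(text)]


text = "Where there's a will, there's a"
tokens = tokenize(text)
token_ids = encode(text)
print(tokens)
print(token_ids)
print(ID_TO_TOKEN[2006])  # the token the LLM predicts next in the slide
```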

Embeddings

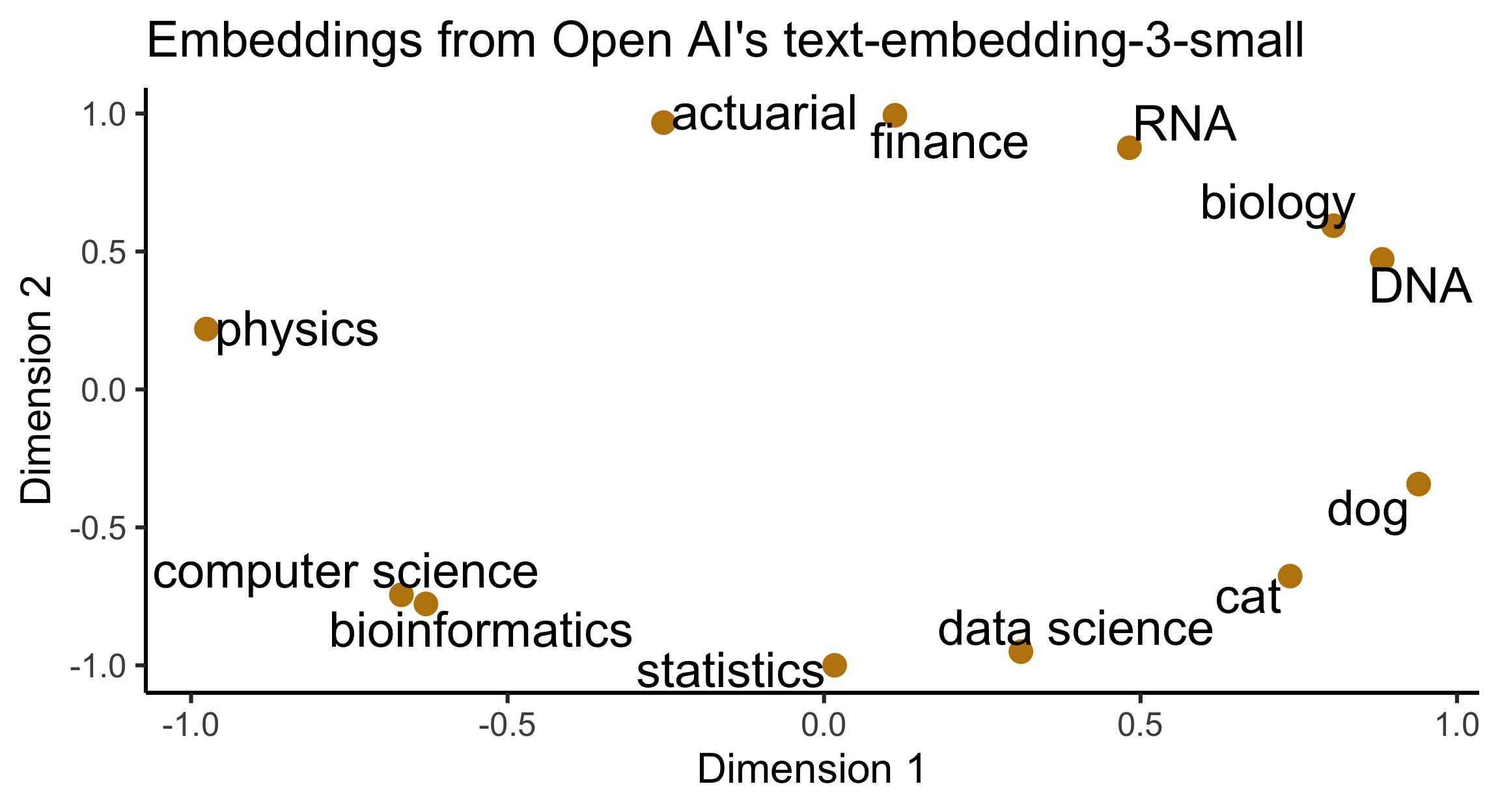

- Language modelling typically uses (token) embeddings.

- Embedding models convert items to numerical vectors such that similar items are closer together than dissimilar items in the embedding space.

- Text embedding models are often based on artificial neural networks.
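One common way to measure "closeness" in an embedding space is cosine similarity. The sketch below uses made-up 3-dimensional vectors purely for illustration; real embeddings have hundreds or thousands of dimensions and come from a trained model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, chosen by hand so related words point the same way.
embeddings = {
    "mean": [0.9, 0.1, 0.2],
    "median": [0.8, 0.2, 0.3],
    "banana": [0.1, 0.9, 0.7],
}

sim_related = cosine_similarity(embeddings["mean"], embeddings["median"])
sim_unrelated = cosine_similarity(embeddings["mean"], embeddings["banana"])
print(sim_related > sim_unrelated)  # True
```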

Artificial neuron

The artificial neuron is an elementary unit of an artificial neural network.

The artificial neuron receives inputs \boldsymbol{x} = (x_{1}, \dots,x_{p})^\top that are typically combined as a weighted sum: z = b + \sum_{j=1}^p w_j x_{j} = b + \boldsymbol{w}^\top\boldsymbol{x}, where \boldsymbol{w} = (w_1, \dots, w_p)^\top are the weights and b is referred to as the bias.

The value z is then passed into the activation function, h(z), e.g.

- Linear: h(z) = z,

- Sigmoid: h(z) = (1+e^{-z})^{-1} (a.k.a. a logistic function), and

- ReLU: h(z) = \max(0, z).

Visualising an artificial neuron

Input layer

1

x_1

x_2

Output layer

h = ReLU

h(z)

When x_1 = 1 and x_2 = 3, then \begin{align*}z &= b + w_1x_1 + w_2 x_2\\ &= 1 + 0.5 \times 1 - 3\times 3 = -7.5.\end{align*}

Using ReLU, the prediction is \max(0, z) = 0.
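The worked example above can be reproduced in a few lines. The function names are chosen for this sketch; the bias and weights (b = 1, w_1 = 0.5, w_2 = -3) are read off the calculation.

```python
def relu(z):
    """ReLU activation: max(0, z)."""
    return max(0.0, z)


def neuron(x, w, b, activation):
    """Return the weighted sum z = b + w^T x and the activated output h(z)."""
    z = b + sum(w_j * x_j for w_j, x_j in zip(w, x))
    return z, activation(z)


z, prediction = neuron(x=[1, 3], w=[0.5, -3], b=1, activation=relu)
print(z, prediction)  # -7.5 0.0
```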

Artificial neural network: regression

Input layer

1

x_1

x_2

Hidden layer

h_1 = ReLU

1

h_1(z_{11})

h_1(z_{12})

Output layer

h_2 = Linear

h_2(z_{21})

- The number of nodes in the input layer is constrained by the (modified) input data.

- The number of nodes in the output layer is constrained by the desired output (regression or classification).
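A forward pass through a network of this shape (two inputs, two ReLU hidden nodes, one linear output node) might look like the sketch below. All weights and biases are hypothetical values picked for the example; they are not the values from the diagram.

```python
def relu(z):
    return max(0.0, z)


def linear(z):
    return z


def dense(x, weights, biases, activation):
    """One layer: every node applies the activation to b + w^T x."""
    return [activation(b + sum(w_j * x_j for w_j, x_j in zip(w, x)))
            for w, b in zip(weights, biases)]


# Hypothetical parameters: one row of weights per node in the layer.
W1, b1 = [[0.5, -1.0], [1.0, 2.0]], [0.0, -1.0]   # hidden layer (2 nodes)
W2, b2 = [[2.0, 1.0]], [0.5]                       # output layer (1 node)

hidden = dense([1.0, 3.0], W1, b1, relu)
output = dense(hidden, W2, b2, linear)

# Each node contributes one bias plus one weight per incoming connection.
n_parameters = sum(len(w) + 1 for w in W1 + W2)
print(hidden, output, n_parameters)  # [0.0, 6.0] [6.5] 9
```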

Artificial neural network: classification

Input layer

1

x_1

x_2

Hidden layer

h_1 = ReLU

1

h_1(z_{11})

h_1(z_{12})

Output layer

h_2 = Softmax

h_2(\boldsymbol{z}, 1)

h_2(\boldsymbol{z}, 2)

h_2(\boldsymbol{z}, 3)

Assume classification to K classes.

Softmax:

h(\boldsymbol{z}, i) = \dfrac{\exp(z_i)}{\sum_{j=1}^K\exp(z_j)}

Output node i contains the “probability score” associated with class i.
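A minimal sketch of the softmax formula above for K = 3 classes. Subtracting the maximum before exponentiating is a common numerical-stability trick and does not change the result; the input scores are arbitrary example values.

```python
import math


def softmax(z):
    """Convert a list of scores into probabilities that sum to 1."""
    shift = max(z)  # subtracting a constant leaves the ratios unchanged
    exps = [math.exp(z_i - shift) for z_i in z]
    total = sum(exps)
    return [e / total for e in exps]


scores = softmax([2.0, 1.0, 0.1])
print([round(s, 3) for s in scores])  # [0.659, 0.242, 0.099]
```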

Feed forward neural network

- Feed forward neural networks, also called deep neural networks, add more hidden layers.

- The output depends on the parameters, \boldsymbol{\theta} = (\underbrace{\boldsymbol{b}^\top}_{\text{biases}},\underbrace{\boldsymbol{w}^\top}_{\text{weights}})^\top.

- The number of parameters grows quickly as layers are added or widened, but you gain flexibility in modelling.

- These parameters are calibrated (or trained) by:

  - Defining an objective (or loss) function (like the mean square error), and

  - Finding parameters \boldsymbol{\theta} that optimise this objective function (using techniques like stochastic gradient descent and backpropagation).
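As a small illustration of the two steps, the sketch below fits a single linear neuron by minimising the mean square error. It is deliberately simplified: it uses plain (full-batch) gradient descent with hand-derived gradients rather than the stochastic variant with backpropagation, and the data, learning rate, and iteration count are assumptions made for the example.

```python
# Toy data generated from y = 1 + 2x, so the true parameters are b = 1, w = 2.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0 + 2.0 * x for x in xs]


def mse(b, w):
    """Objective function: mean square error of the predictions b + w x."""
    return sum((b + w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


b, w = 0.0, 0.0          # initial parameter values
learning_rate = 0.05     # assumed step size
for _ in range(2000):
    residuals = [b + w * x - y for x, y in zip(xs, ys)]
    grad_b = 2 * sum(residuals) / len(xs)                            # d MSE / d b
    grad_w = 2 * sum(r * x for r, x in zip(residuals, xs)) / len(xs)  # d MSE / d w
    b -= learning_rate * grad_b
    w -= learning_rate * grad_w

print(round(b, 4), round(w, 4), mse(b, w) < 1e-12)
```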

Embedding model: Word2Vec for illustration of an embedding process

- Word2Vec is one of the early unsupervised word embedding models based on a three-layer neural network using a fixed window size.

- Training data: Statisticians analyse data

- Training mode: Continuous Bag Of Words

- Vocabulary size: 5

- Window size: 3

- Aim: Predict middle word

Input (one-hot encoded context words):

- Statisticians: (1, 0, 0, 0, 0)

- data: (0, 0, 1, 0, 0)

Output (predicted probabilities): (0.01, 0.89, 0.04, 0.01, 0.01)

Actual: analyse, (0, 1, 0, 0, 0)

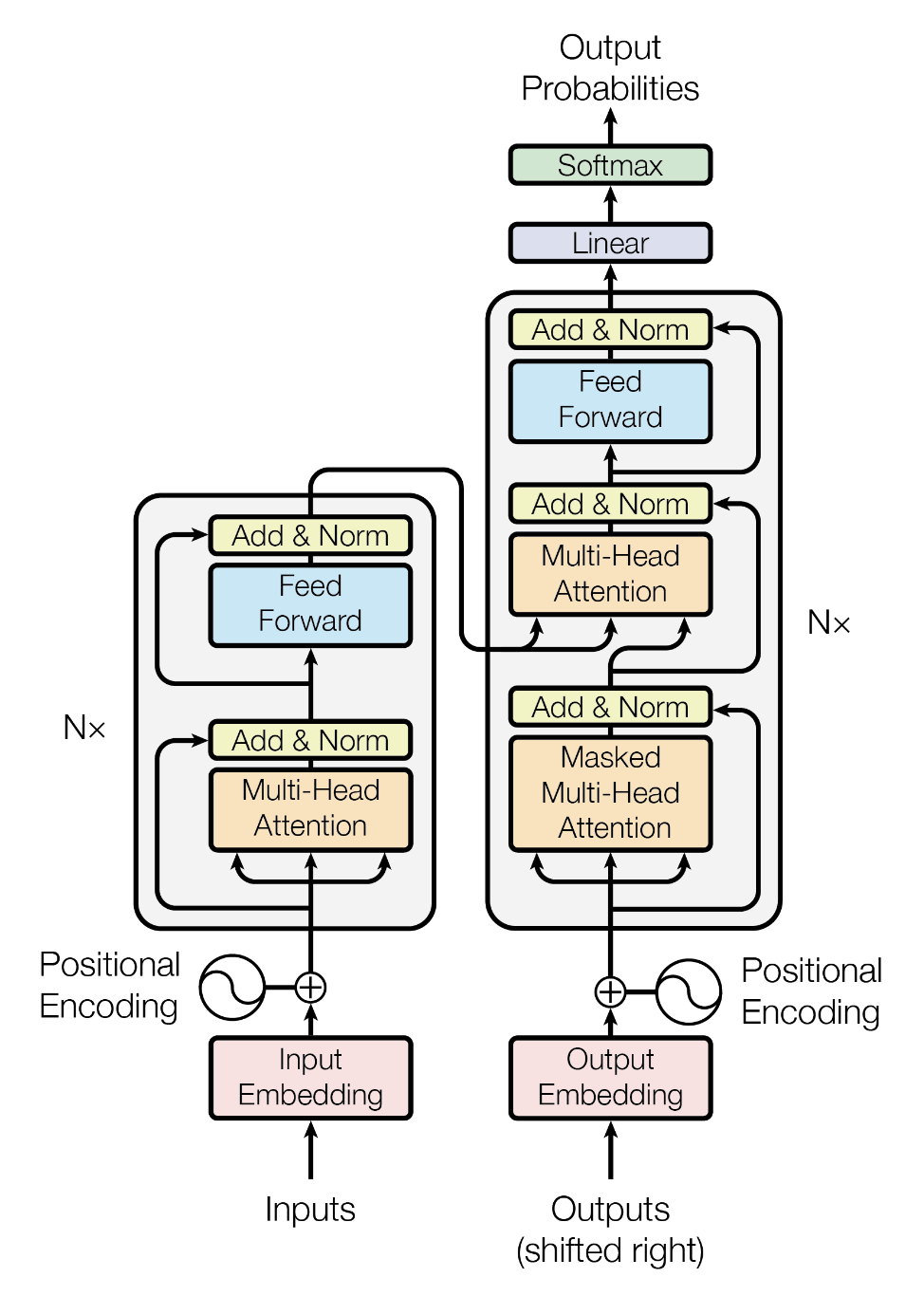
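The Continuous Bag Of Words forward pass for this example can be sketched as follows. The weights are random (untrained), the embedding dimension of 2 is an assumption, and the last two vocabulary entries are hypothetical placeholders, since the slide only names three of the five words.

```python
import math
import random

random.seed(0)
# The last two entries are hypothetical fillers to reach a vocabulary size of 5.
vocab = ["Statisticians", "analyse", "data", "<word4>", "<word5>"]
V, D = len(vocab), 2  # vocabulary size and (assumed) embedding dimension

# Untrained weights: W_in holds one D-dimensional embedding per word.
W_in = [[random.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(V)]
W_out = [[random.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(D)]


def one_hot(word):
    return [1.0 if w == word else 0.0 for w in vocab]


def softmax(z):
    exps = [math.exp(z_i - max(z)) for z_i in z]
    return [e / sum(exps) for e in exps]


def cbow_forward(context_words):
    """Average the context one-hot vectors, embed, then score every word."""
    vectors = [one_hot(w) for w in context_words]
    x = [sum(column) / len(vectors) for column in zip(*vectors)]
    hidden = [sum(x[i] * W_in[i][d] for i in range(V)) for d in range(D)]
    scores = [sum(hidden[d] * W_out[d][j] for d in range(D)) for j in range(V)]
    return softmax(scores)


probs = cbow_forward(["Statisticians", "data"])
# Cross-entropy loss against the actual middle word, "analyse".
loss = -math.log(probs[vocab.index("analyse")])
print([round(p, 2) for p in probs], round(loss, 2))
```

Training would adjust `W_in` and `W_out` to reduce this loss; the rows of `W_in` are then used as the word embeddings.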

Transformer model

- Popular LLMs are variants of a pre-trained transformer model.

- The original transformer model consists of:

  - Input embeddings of current and previous positions

  - Positional embeddings

  - A stack of transformer blocks (N=6) with each containing:

    - An attention layer with multiple attention heads

    - Normalisation layers

    - Feed forward neural network with 3 hidden layers: Linear, ReLU, then Linear

  - Un-embedding layer (Softmax)

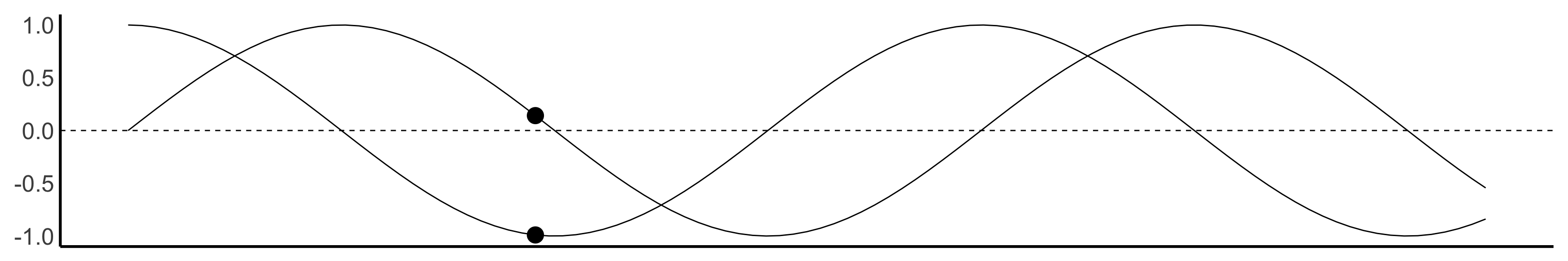

Positional embeddings

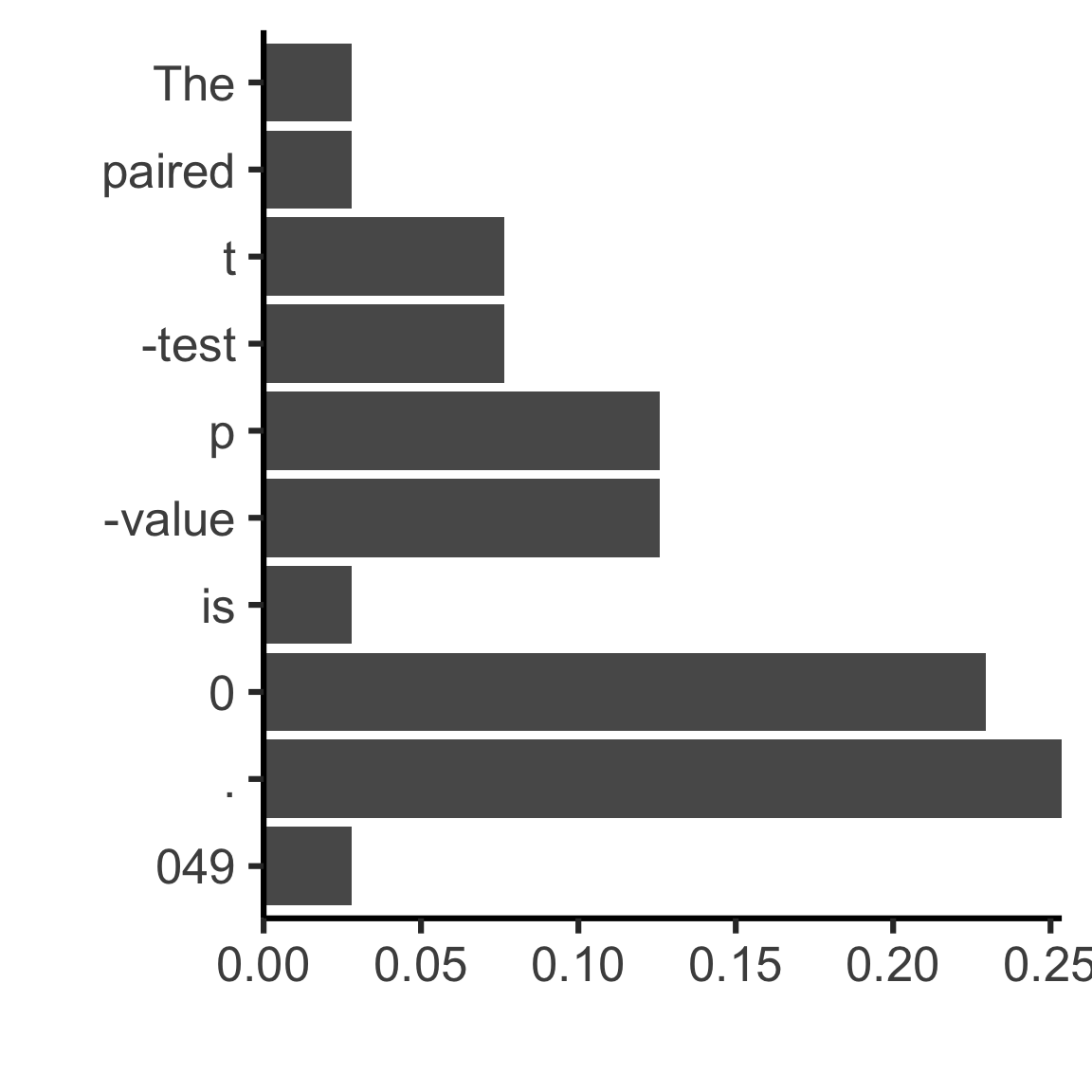

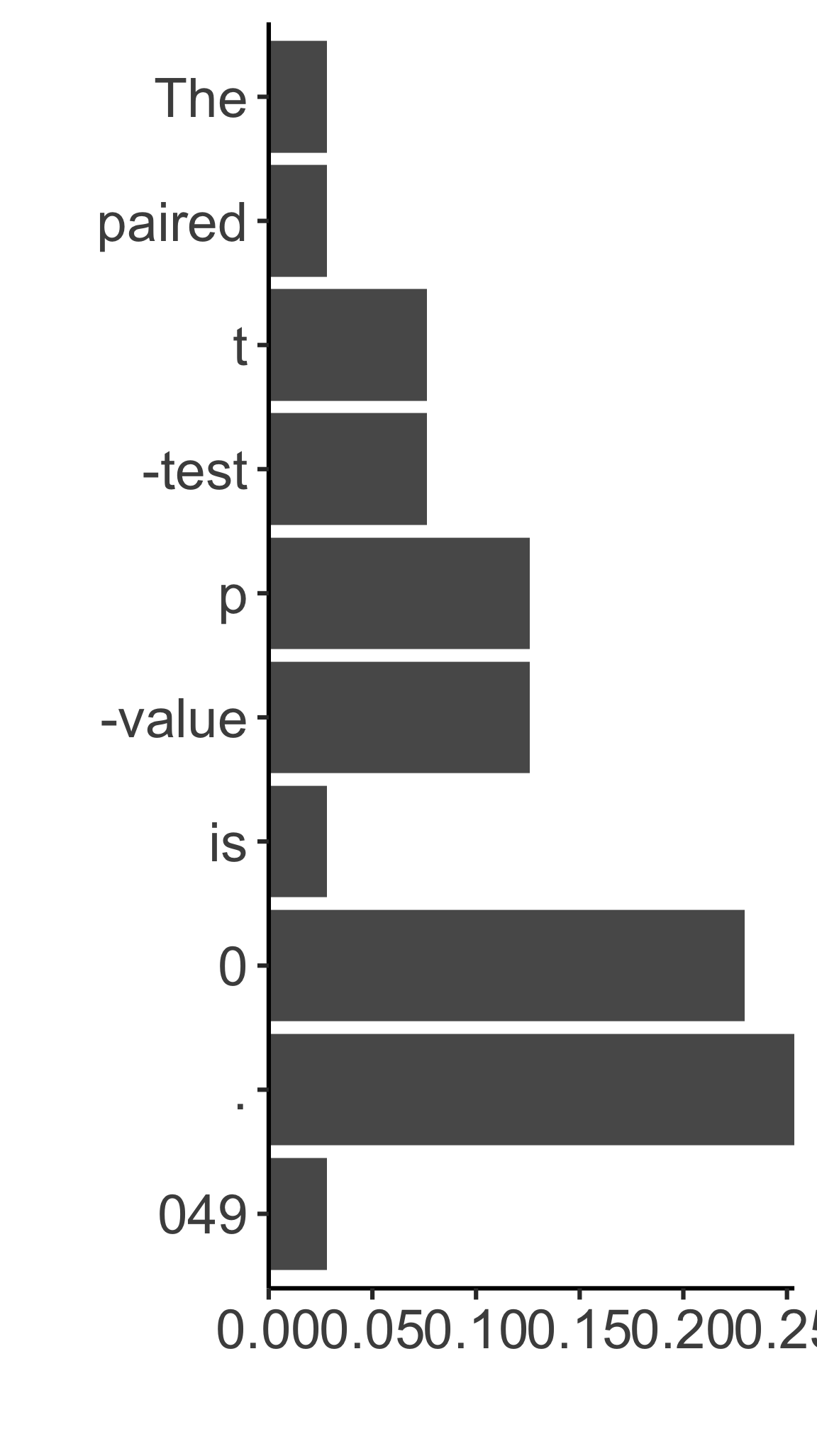

Input The paired t-test p-value is 0.049, so it is statistically significant

Token The paired t -test p -value is 0 . 049 , so it is statistically significant

- Context length: below 10 (typically much larger, e.g. 4096 for llama3.1:8b)

The paired t -test p -value is 0 . 049

- Transformer blocks are blind to the position of a token, so positional embeddings incorporate information about the relative or absolute positions of tokens for the subsequent steps.

- The positional embedding in the original transformer is represented by sine and cosine waves with unique frequencies and offsets:

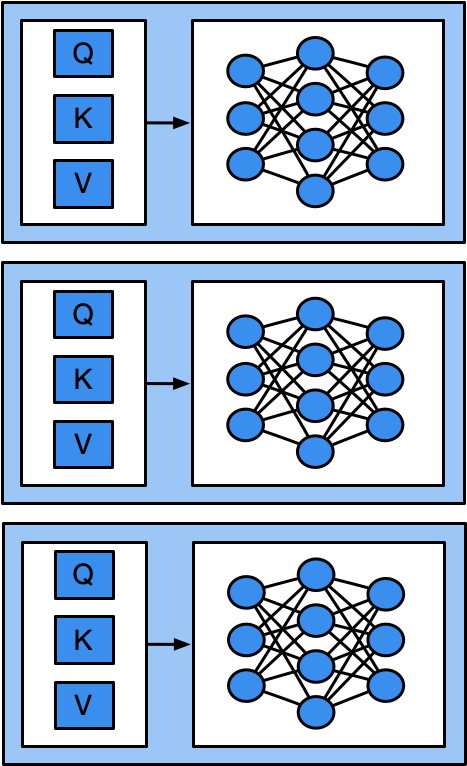
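A minimal sketch of the sinusoidal positional embedding from the original transformer, where even dimensions use a sine wave and odd dimensions a cosine wave, each pair with its own frequency. The function name and the small embedding dimension are choices made for this illustration.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal position vector: sin on even indices, cos on odd indices."""
    encoding = []
    for i in range(0, d_model, 2):
        # Each pair of dimensions shares one frequency, 1 / 10000^(i / d_model).
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))
        encoding.append(math.cos(angle))
    return encoding[:d_model]


pe_first = positional_encoding(0, 4)
pe_tenth = positional_encoding(9, 4)
print(pe_first)  # [0.0, 1.0, 0.0, 1.0]
print([round(v, 3) for v in pe_tenth])
```

These vectors are added to the token embeddings, so the same token receives a different input vector at each position.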

Attention head: Projection matrices

- The goal of the attention layer is to incorporate relevant information from previous tokens into the current token.

The paired t -test p -value is 0 . 049

- Suppose the current position is 049 – the neural network doesn’t know what this token refers to without looking at the previous tokens.

- The attention head consists of query, key, and value projection matrices.

Attention Head: Relevance scoring

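- The embedding matrix is multiplied with each of the projection matrices to give the query, key, and value vectors.

- The query vector of the current position is multiplied with the key vectors of the current and previous positions to give a relevance score for each position: Result \rightarrow Softmax(Result)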

Attention Head: Combining information

- Each value vector is multiplied by its softmax weight.

- Summing the weighted value vectors gives the new token embedding, which incorporates relevant information from other tokens.
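The three steps (projection, relevance scoring, combining) can be sketched for a single attention head as below. The embeddings and the identity projection matrices are hypothetical values chosen to keep the arithmetic easy to follow; the division by the square root of the key dimension follows the original transformer, and each position only looks at itself and earlier positions.

```python
import math


def matmul(A, B):
    """Plain matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(z):
    exps = [math.exp(z_i - max(z)) for z_i in z]
    return [e / sum(exps) for e in exps]


def attention_head(X, W_q, W_k, W_v):
    """Return one updated vector per position using causal attention."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    outputs = []
    for t, query in enumerate(Q):
        # Relevance of positions 0..t to the current position t.
        scores = [sum(q * k for q, k in zip(query, K[s])) / math.sqrt(d_k)
                  for s in range(t + 1)]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([sum(w * V[s][j] for s, w in enumerate(weights))
                        for j in range(len(V[0]))])
    return outputs


# Hypothetical embeddings for three tokens and identity projections.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
new_embeddings = attention_head(X, identity, identity, identity)
print(new_embeddings[0])  # the first token can only attend to itself
```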

Summary

Input:

All models are wrong, but some are

Updated input:

All models are wrong, but some are useful

Updated input:

All models are wrong, but some are useful.

Tokenizer

Embedding layer

Transformer blocks

(artificial neural network)

Un-embedding layer

| Token | Token ID |

|---|---|

| All | 2594 |

| models | 7015 |

| are | 553 |

| wrong | 8201 |

| , | 11 |

| but | 889 |

Token

Numerical vectors

2594

7015

0553

8201

0011

0889

| Token ID | Probability |

|---|---|

| 1236 | 0.844 |

| 1991 | 0.004 |

| 12698 | 0.001 |

| ... | ... |

8316 = useful

13 = .

XXXX = <|end|>

Output: useful.

LLM
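The loop summarised above (predict a token, append it to the input, repeat until the end token) can be sketched with a toy stand-in for the model. The lookup table and its probabilities are invented for this illustration; a real LLM conditions on the whole input, not just the last token, and often samples rather than always taking the most probable token.

```python
END = "<|end|>"

# Hypothetical stand-in for tokenizer, embedding layer, transformer blocks and
# un-embedding layer: next-token probabilities keyed by the last token only.
NEXT_TOKEN_PROBS = {
    "are": {"useful": 0.8, "wrong": 0.2},
    "useful": {".": 0.9, END: 0.1},
    ".": {END: 0.95, "useful": 0.05},
}


def next_token(tokens):
    """Greedy choice: pick the most probable next token."""
    probs = NEXT_TOKEN_PROBS[tokens[-1]]
    return max(probs, key=probs.get)


def generate(prompt_tokens, max_new_tokens=10):
    """Append predicted tokens to the input until the end token appears."""
    tokens = list(prompt_tokens)
    generated = []
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token == END:
            break
        tokens.append(token)       # the updated input for the next step
        generated.append(token)
    return generated


prompt = ["All", "models", "are", "wrong", ",", "but", "some", "are"]
output = generate(prompt)
print(output)  # ['useful', '.']
```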

Evaluation of an LLM

Benchmark: MMLU

- Measuring Massive Multitask Language Understanding (MMLU) is a public benchmark containing ~16,000 multiple choice questions (4 choices) spanning 57 academic subjects.

Which three systems of the human body function together to move and control body parts?

- nervous, skeletal, and muscular

- muscular, endocrine, and excretory

- digestive, excretory, and reproductive

- circulatory, endocrine, and respiratory

Correct answer: nervous, skeletal, and muscular

- An enhanced version, MMLU-Pro, contains 12,000 multiple choice questions (10 choices) across 14 domains with more challenging college-level problems.

Benchmark: MuSR

- Multistep Soft Reasoning (MuSR): free text narratives on murder mysteries, object placements and team allocation.

Emily took her final stroll in the park last night, forever, when her life was snuffed out under the mask of night. The cause of death was a single fatal shot from a pistol. Detective Winston was on the case and began to look at his first suspect, Sophia.

Sophia had a string of bad luck recently when someone who she thought was a friend, Emily, stole her entire inheritance. Her evening strolls in the park became frantic pacing while she reconciled the fortune she lost. Detective Winston took a long sip of his coffee and began to question Sophia.

‘Quite the marksmen I see’ - pointing to a picture of her holding a recently shot buck up.

‘Yeah, my dad loved taking me shooting’ - Sophia replied sheepishly

Identify the killer. Killers have a motive, means, and opportunity …

Benchmark: HumanEval

- HumanEval is a dataset of hand-written coding problems.

- The evaluation of the LLM is based on the unit tests associated with the code it generates.

Example 1

You will be given a string of words separated by commas or spaces. Your task is to split the string into words and return an array of the words.

For example:

    words_string("Hi, my name is John") == ["Hi", "my", "name", "is", "John"]
    words_string("One, two, three, four, five, six") == ["One", "two", "three", "four", "five", "six"]
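To illustrate how unit-test based scoring works, the sketch below checks two candidate solutions for Example 1 against the two examples from the prompt. Both candidates and the `check` and `passes` helpers are written for this illustration; they are not the benchmark's own test harness or an actual LLM response.

```python
import re


def words_string(s):
    """Candidate solution: split on runs of commas and/or whitespace."""
    return [word for word in re.split(r"[,\s]+", s) if word]


def naive_words_string(s):
    """A plausible but wrong candidate: splits on commas only."""
    return s.split(",")


def check(candidate):
    """Unit tests taken from the examples in the prompt."""
    assert candidate("Hi, my name is John") == ["Hi", "my", "name", "is", "John"]
    assert candidate("One, two, three, four, five, six") == [
        "One", "two", "three", "four", "five", "six"]


def passes(candidate):
    """A generated solution counts as correct only if every test passes."""
    try:
        check(candidate)
        return True
    except AssertionError:
        return False


print(passes(words_string), passes(naive_words_string))  # True False
```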

Example 2

    def is_prime(n):
        """Return true if a given number is prime, and false otherwise.
        >>> is_prime(6)
        False
        >>> is_prime(101)
        True
        >>> is_prime(11)
        True
        >>> is_prime(13441)
        True
        >>> is_prime(61)
        True
        >>> is_prime(4)
        False
        >>> is_prime(1)
        False
        """

Other evaluations

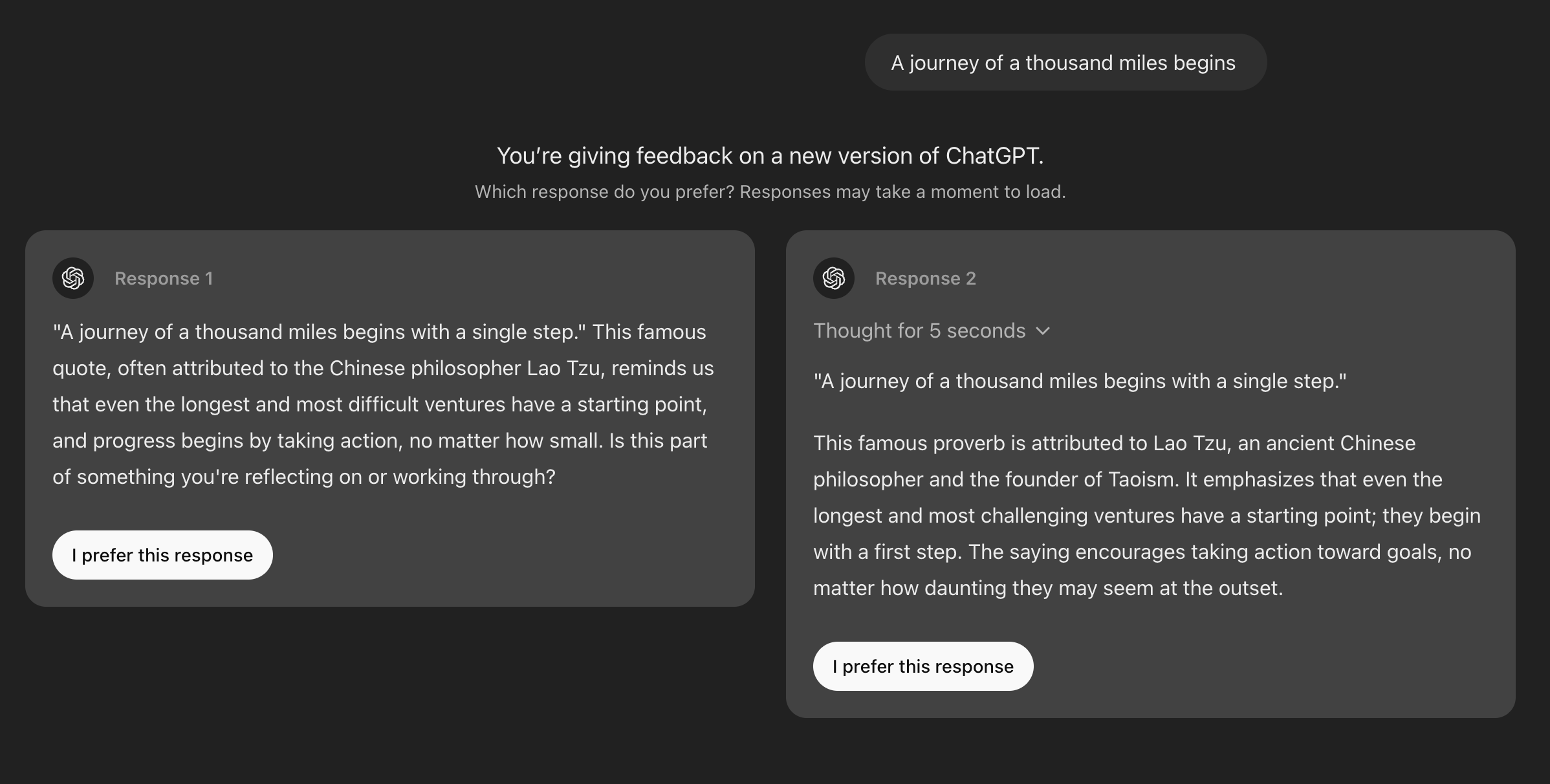

- Human evaluation can involve:

  - Accept/Reject of the response

  - Scoring the quality of the response on various aspects (e.g. answer relevancy, correctness, hallucination, responsible metrics, and so on).

  - Pick preferred response between two responses (reinforcement learning)

- LLM evaluating LLM1: e.g. Prometheus2 uses LLM to evaluate other LLMs

Demo #3

emitanaka.org/workshop-LLM-2024/