Landscape of Large Language Models

Introduction to Large Language Models for Statisticians

15th October 2024

ChatGPT

- ChatGPT was released to the public on 30th November 2022.

- ChatGPT gained a staggering 100 million active users in 2 months.¹

ChatGPT Heralds an Intellectual Revolution

25 February 2023

By Henry Kissinger, Eric Schmidt, and Daniel Huttenlocher

Generative artificial intelligence presents a philosophical and practical challenge on a scale not experienced since the start of the Enlightenment.

A new technology bids to transform the human cognitive process as it has not been shaken up since the invention of printing. The technology that printed the Gutenberg Bible in 1455 made abstract human thought communicable generally and rapidly. But new technology today reverses that process. Whereas the printing press caused a profusion of modern human thought, the new technology achieves its distillation and elaboration. In the process, it creates a gap between human knowledge and human understanding. If we are to navigate this transformation successfully, new concepts of human thought and interaction with machines will need to be developed. This is the essential challenge of the Age of Artificial Intelligence.

…

Mr. Kissinger served as secretary of state, 1973-77, and White House national security adviser, 1969-75. Mr. Schmidt was CEO of Google, 2001-11 and executive chairman of Google and its successor, Alphabet Inc., 2011-17. Mr. Huttenlocher is dean of the Schwarzman College of Computing at the Massachusetts Institute of Technology. They are authors of “The Age of AI: And Our Human Future.” The authors thank Eleanor Runde for her research.

ChatGPT Demo

Chatbot timeline


Data Source: https://en.wikipedia.org/wiki/List_of_chatbots (Accessed on 11/08/2024)

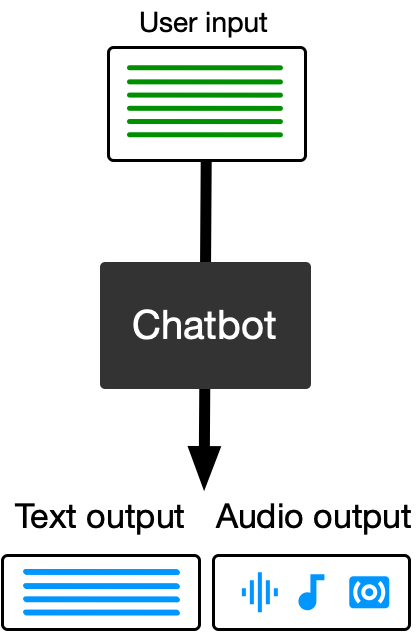

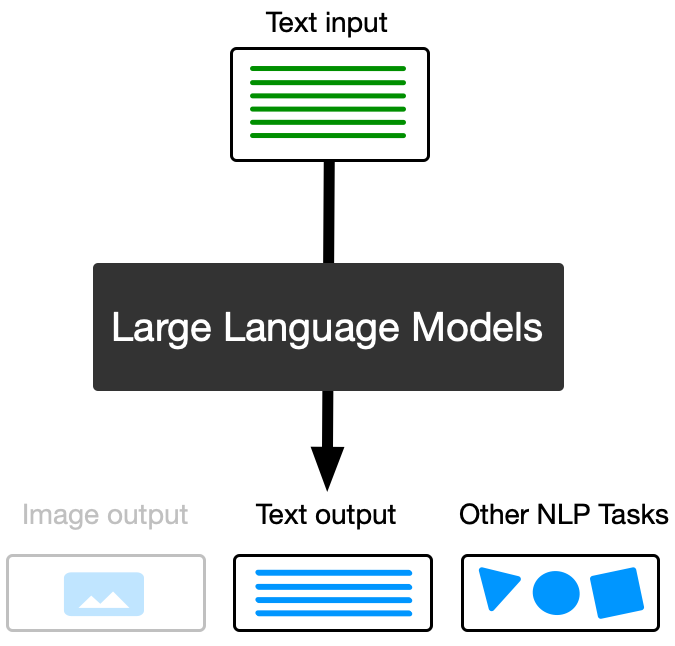

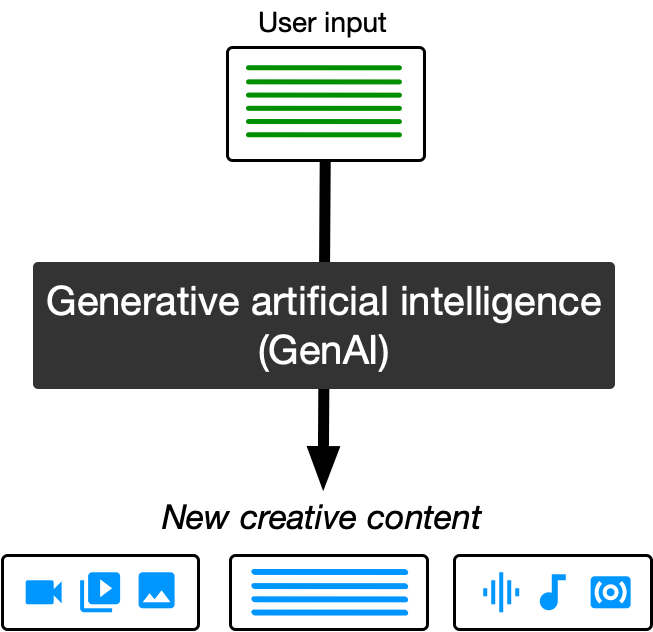

Related concepts

- Chatbots, LLMs and generative AI (genAI) are overlapping concepts with different focuses, often using related methods:

  - Chatbot: chat with user
  - LLM: multiple purposes
  - Generative AI: new creative content

- Note: not all LLMs power chatbots and genAI, but most do.

- We’ll look at the architecture of LLMs later in the workshop.

Rise of large language models


Data Source: https://en.wikipedia.org/wiki/Large_language_model#List (Accessed on 11/08/2024)

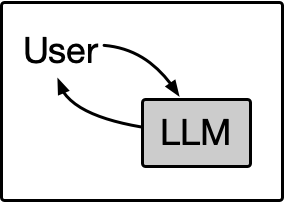

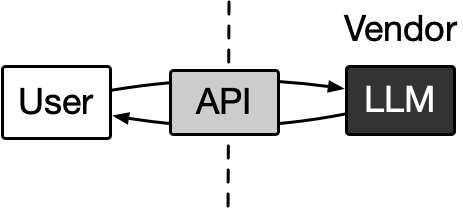

Using an LLM

Local LLM

GPT4All, LM Studio, Jan, llama.cpp, llamafile, Ollama, NextChat, …

- No internet access required

- No account required

- Several GB of hard disk space required

- At least 16GB RAM required for 7b parameter LLMs

OpenAI

Available models include: chatgpt-4o-latest, gpt-4o, gpt-4o-mini, gpt-3.5-turbo, dall-e-3, text-embedding-ada-002, tts-1-hd, whisper-1, …

```bash
curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-4o-mini",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
```

Ollama

Available models include: llama3.2:1b, llama3.2:3b, llama3.1:8b, llama3.1:70b, llama3.1:405b, gemma2:2b, gemma2:9b, gemma2:27b, llava:7b, …

```bash
curl http://localhost:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "llama3.2:1b",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
```
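Both curl calls above send the same JSON body; only the URL and the `Authorization` header differ. The sketch below builds that request in Python with the standard library only. The helper names `build_chat_request` and `send` are hypothetical, and it assumes the Ollama server exposes its OpenAI-compatible endpoint at the URL shown above.

```python
import json
import urllib.request

# Endpoints taken from the curl examples above.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_request(url, model, user_prompt,
                       system_prompt="You are a helpful assistant.",
                       api_key=None):
    """Build (but do not send) a chat-completions POST request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # OpenAI needs a key; a local Ollama server does not
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request):
    """Send the request and return the text of the assistant's reply."""
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With a local Ollama server running and `llama3.2:1b` pulled, `send(build_chat_request(OLLAMA_URL, "llama3.2:1b", "Hello!"))` should return the reply text; for OpenAI, pass `OPENAI_URL`, an OpenAI model name and your API key (e.g. read from the `OPENAI_API_KEY` environment variable).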

- Pricing: FREE.

- The model name has a suffix with the number of parameters, e.g. llama3.1:8b has about 8 billion parameters.

- A larger number of parameters requires more RAM (~16GB RAM required for 7b).
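The link between parameter count and RAM can be approximated with simple arithmetic: memory for the weights ≈ number of parameters × bits per parameter ÷ 8 bytes. This is a rough sketch under stated assumptions: it counts the weights only (not the context/KV cache or runtime overhead), uses the nominal sizes from the model-name suffixes, and the precision (16-bit vs 4-bit quantised) is an assumption you should check for the model you download.

```python
def weight_memory_gb(n_params, bits_per_param=16):
    """Approximate memory (GB, 1 GB = 1e9 bytes) needed to hold the weights only."""
    return n_params * bits_per_param / 8 / 1e9


# Nominal parameter counts taken from the model-name suffixes.
for name, n_params in [("llama3.2:1b", 1e9), ("llama3.1:8b", 8e9), ("llama3.1:70b", 70e9)]:
    print(f"{name}: {weight_memory_gb(n_params, 16):.1f} GB at 16-bit, "
          f"{weight_memory_gb(n_params, 4):.1f} GB at 4-bit")
```

At 16-bit precision an 8b model needs about 16 GB just for its weights, while a 4-bit quantised version needs roughly a quarter of that.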

Which LLM to use?

- LLM leaderboards, e.g. Open LLM Leaderboard and LLM Arena.

- Models with fewer parameters may not perform as well on complex tasks.

- There are some specialised LLMs, e.g.

  - dall-e-3 generates images from user text input
  - llava:7b is a multi-modal model and can take image input
  - mathstral:7b is designed for math reasoning and scientific discovery
  - deepseek-coder-v2:16b is comparable to gpt-4-turbo in code-specific tasks
  - meditron:7b is adapted from llama2 for the medical domain

- Currently I mostly use:

  - OpenAI: gpt-4o, gpt-4o-mini and dall-e-3, with a payment of US$5 so far
  - Ollama: llama3.1:8b and llava:7b

Demo #1

Predictive model

- At its core, a large language model predicts the next word given a sequence of words.

Input: All models are wrong, but some are

- LLM predicts: useful
- Updated input: All models are wrong, but some are useful
- LLM predicts: .
- Updated input: All models are wrong, but some are useful.
- LLM predicts: <|end|> (end of generation)

Output: useful.
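The steps above form a loop: predict one token, append it to the input, and repeat until an end token appears. The sketch below shows that loop with a hypothetical lookup table (`TOY_NEXT_TOKEN`) standing in for the LLM; a real model would compute a probability distribution over its whole vocabulary at each step.

```python
END = "<|end|>"

# Hypothetical toy "model": maps the current text to its next token.
TOY_NEXT_TOKEN = {
    "All models are wrong, but some are": " useful",
    "All models are wrong, but some are useful": ".",
    "All models are wrong, but some are useful.": END,
}


def generate(prompt, next_token, max_tokens=50):
    """Repeatedly predict the next token and append it until the end token."""
    text = prompt
    output = []
    for _ in range(max_tokens):
        token = next_token(text)
        if token is None or token == END:  # stop at the end token (or an unknown input)
            break
        output.append(token)
        text += token  # the prediction becomes part of the updated input
    return "".join(output)


print(generate("All models are wrong, but some are", TOY_NEXT_TOKEN.get).strip())
# useful.
```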

Tokenization

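Input: Where there’s a will, there’s a

| Token    | Where | there | ’s  | a   | will | ,  | there | ’s  | a   |
|----------|-------|-------|-----|-----|------|----|-------|-----|-----|
| Token ID | 11977 | 1354  | 802 | 261 | 738  | 11 | 1354  | 802 | 261 |

The LLM outputs token ID 2006, which decodes to “way”.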
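The model itself only ever sees the integer token IDs. The sketch below encodes the tokens shown above into IDs and decodes the predicted ID back into text, using a toy vocabulary that contains only the token/ID pairs from this example (a real tokenizer's vocabulary is far larger, and it may store some tokens with a leading space, which this toy version ignores).

```python
# Toy vocabulary: only the token/ID pairs from the example above.
VOCAB = {"Where": 11977, "there": 1354, "’s": 802, "a": 261,
         "will": 738, ",": 11, "way": 2006}
ID_TO_TOKEN = {token_id: token for token, token_id in VOCAB.items()}

TOKENS = ["Where", "there", "’s", "a", "will", ",", "there", "’s", "a"]


def encode(tokens):
    """Map token strings to their integer IDs."""
    return [VOCAB[token] for token in tokens]


def decode(token_ids):
    """Map integer IDs back to token strings."""
    return [ID_TO_TOKEN[token_id] for token_id in token_ids]


print(encode(TOKENS))  # [11977, 1354, 802, 261, 738, 11, 1354, 802, 261]
print(decode([2006]))  # ['way']
```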

Tokens are not necessarily words

- Every LLM has its own tokenizer with varying vocabulary size.

- Rule of thumb for common English text: 1 token = ~4 characters = ~0.75 words

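| Word           | Tokens (token IDs)                           |
|----------------|----------------------------------------------|
| summary        | summary (3861)                               |
| summarise      | summ (141249) · ar (277) · ise (1096)        |
| summarize      | summ (141249) · ar (277) · ize (750)         |
| summarising    | summ (141249) · ar (277) · ising (5066)      |
| summarizations | summ (141249) · ar (277) · izations (25434)  |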

Special tokens

- In practice, summarise may be tokenized as summ ##ar ##ise, where “##” indicates that the token continues the preceding token rather than starting a new word.

- Due to the popularity of chatbots since 2023, tokenizers have adapted to conversation with special tokens that indicate the speaker role, such as

  - <|system|> – high-level instructions,
  - <|user|> – user (usually human) queries or prompts,
  - <|assistant|> – typically the model’s response, and
  - other tokens, e.g. <|tool|>.
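Before a chat reaches the model, the list of messages is flattened into a single string marked up with these role tokens. The sketch below assumes one possible layout (`<|role|>`, newline, content, `<|end|>`); this layout and the helper name `format_chat` are assumptions for illustration, since each model defines its own chat template.

```python
def format_chat(messages, add_generation_prompt=True):
    """Flatten chat messages into one string using role special tokens.

    Assumed layout: <|role|>, newline, content, <|end|>, newline.
    Real models each define their own template.
    """
    parts = [f"<|{m['role']}|>\n{m['content']}<|end|>\n" for m in messages]
    if add_generation_prompt:
        parts.append("<|assistant|>\n")  # cue the model to write the next turn
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hello!"},
]
print(format_chat(messages))
```

The model then continues the text after `<|assistant|>`, and an end token such as `<|end|>` signals that its turn is finished.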

Token distribution

Input: Where there’s a will, there’s a

- Output is randomly sampled from likely tokens weighted by their respective probabilities.

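- Original distribution: candidate token IDs 2006, 301, 35, 4443, 4, …, each with a probability.

- Small top_p: only the most probable tokens are kept (here 2006 and 301), and their probabilities are renormalised.

- High temperature: the same candidate tokens remain (2006, 301, 35, 4443, 4, …), but the distribution is flattened, so less likely tokens are sampled more often.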

- seed ensures the same random sample given the same input (important for reproducibility!), but the same seed may not yield the same result across different systems.
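The effect of temperature, top_p and seed can be sketched in a few lines. The logits (unnormalised scores) below are made-up numbers for the candidate token IDs above; the sketch applies a softmax with temperature, keeps the smallest set of most probable tokens whose total probability reaches top_p (nucleus sampling), renormalises, and draws one token with a seeded random generator. It illustrates the idea and is not how any particular provider implements it.

```python
import math
import random

# Made-up logits for the candidate token IDs above.
LOGITS = {2006: 5.0, 301: 3.5, 35: 2.0, 4443: 1.0, 4: 0.5}


def token_distribution(logits, temperature=1.0, top_p=1.0):
    """Softmax with temperature (> 0), then keep the top_p nucleus and renormalise."""
    scaled = {tok: z / temperature for tok, z in logits.items()}
    max_z = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(z - max_z) for tok, z in scaled.items()}
    total = sum(weights.values())
    kept, mass = {}, 0.0
    for tok in sorted(weights, key=weights.get, reverse=True):  # most probable first
        kept[tok] = weights[tok] / total
        mass += kept[tok]
        if mass >= top_p:  # smallest set whose probability reaches top_p
            break
    norm = sum(kept.values())
    return {tok: p / norm for tok, p in kept.items()}


def sample_token(logits, temperature=1.0, top_p=1.0, seed=None):
    """Draw one token; a fixed seed makes the draw repeatable on this system."""
    dist = token_distribution(logits, temperature, top_p)
    rng = random.Random(seed)
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]


print(token_distribution(LOGITS, top_p=0.9))        # keeps only 2006 and 301
print(token_distribution(LOGITS, temperature=2.0))  # flatter than temperature=1.0
print(sample_token(LOGITS, temperature=0.8, seed=42))
```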

Demo #2

Prompt engineering

- Prompt engineering is the process of designing and refining the prompts for a language model to generate specific types of output.

Instruction-based prompt

- You can give more specific instructions regarding the format.

Zero-shot prompt

- When an LLM is given no examples of the task to carry out, this is referred to as a zero-shot prompt.

- An LLM can be fine-tuned by training on new labelled data, but this is computationally expensive and out of scope for typical analysts.

- Prompt engineering with one- or few-shot prompts is a low-cost approach that enables in-context learning.

In-context learning

- If examples of the expected response are provided in the prompt, this is called a one-shot prompt (one example) or a few-shot prompt (more than one example).
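One common way to supply examples in a chat API is to add them as earlier user/assistant turns before the real query. The sketch below builds such a message list; the helper `few_shot_messages` and the classification examples are hypothetical, and putting all examples inside a single user message is an equally valid alternative.

```python
def few_shot_messages(instruction, examples, query):
    """Build chat messages with worked examples as earlier user/assistant turns.

    One example gives a one-shot prompt; several give a few-shot prompt.
    """
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": query})
    return messages


# Hypothetical examples for illustration.
messages = few_shot_messages(
    "Classify the research field of the text. Return the field only.",
    [("Confidence intervals for the median of skewed data.", "Statistics."),
     ("A faster algorithm for sorting linked lists.", "Computer Science.")],
    "Bayesian hierarchical models for small-area estimation.",
)
for m in messages:
    print(m["role"], "|", m["content"])
```

The resulting list can be used as the `"messages"` field in the request body of the curl examples earlier.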

Chain-of-thought

Chain-of-thought aims to make the LLM “think” before answering.

Reasoning is a core component of human intelligence, and LLMs can mimic “reasoning” through memorisation and pattern matching learned from a large corpus of text.
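A chain-of-thought prompt asks for the intermediate steps before the answer. The sketch below contrasts a direct prompt with a chain-of-thought prompt for a median question and computes the correct answer with Python's `statistics.median`, so the LLM's response can be checked. The data and the exact prompt wording are hypothetical.

```python
import statistics

# Hypothetical data for illustration.
values = [7, 3, 9, 1, 4, 8]

direct_prompt = f"What is the median of {values}? Return the value only."
cot_prompt = (
    f"What is the median of {values}? "
    "Think step by step: first sort the values, then find the middle value(s), "
    "and give the final answer on the last line."
)

# Reference answer to check the LLM's response against.
print(statistics.median(values))  # 5.5
```

Because the LLM only mimics reasoning, checking its answer against a direct computation like this is good practice for any numerical task.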

- Here is another example where we want to calculate the median:

Prompt engineering components

Some common components include:

- Instruction: the task (be as specific as possible).

- Format: the response format, e.g. “return the value only” or “return as JSON”.

- Example: example(s) of input and expected response.

- Context: additional information about the context of the task.

- Persona: the role of the LLM, e.g. “You are a statistics tutor”.

- Audience: the target audience, e.g. “Explain it to a high school student”.

- Tone: the tone of the text, e.g. “Respond professionally”.
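The components can be assembled programmatically so that each one is easy to vary and compare. The sketch below joins whichever components are supplied into one prompt; the helper `build_prompt` and the order in `COMPONENT_ORDER` are assumptions for illustration, not a standard.

```python
# Order in which components are joined (an assumption, not a standard).
COMPONENT_ORDER = ["persona", "instruction", "context", "format",
                   "audience", "tone", "example"]


def build_prompt(**components):
    """Join whichever prompt components are given into one prompt string."""
    unknown = set(components) - set(COMPONENT_ORDER)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    return "\n".join(components[name] for name in COMPONENT_ORDER
                     if components.get(name))


prompt = build_prompt(
    persona="You are a statistics tutor.",
    instruction="Explain what a p-value is.",
    audience="Explain it to a high school student.",
    tone="Respond professionally.",
    format="Use at most three sentences.",
)
print(prompt)
```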

Self-consistency

- To make the responses more reliable, you can generate a response to the same prompt multiple times and select the most common answer (the mode).
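Selecting the mode is a majority vote over repeated responses. The sketch below lightly normalises the answers (whitespace, trailing full stops, case) before counting, and also reports the share of votes as a rough measure of agreement. The responses are hypothetical; in practice they would come from repeated calls with a temperature above 0 (or different seeds), since identical settings with the same seed would give identical responses.

```python
from collections import Counter


def self_consistent_answer(responses):
    """Return the most common response (the mode) and its share of the votes."""
    normalised = [r.strip().rstrip(".").lower() for r in responses]
    answer, count = Counter(normalised).most_common(1)[0]
    return answer, count / len(normalised)


# Hypothetical responses from running the same prompt five times.
responses = ["5.5", "5.5", "4", "5.5.", " 5.5"]
print(self_consistent_answer(responses))  # ('5.5', 0.8)
```

A low vote share is a useful warning sign that the LLM's answer to that prompt is unreliable.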

🤔 Pondering

(for later)

How do LLMs complement or hinder statistical thinking?

What role should LLMs play in decision-making processes and research?

How will LLMs impact the training and development of future statisticians and data scientists?

What are the use cases of LLMs for you (if any)?

emitanaka.org/workshop-LLM-2024/
